Chinese AI startup DeepSeek has unveiled a new large language model that, according to benchmark results, outperforms counterparts from Meta and OpenAI.

🚀 Introducing DeepSeek-V3!

Biggest leap forward yet:

⚡ 60 tokens/second (3x faster than V2!)

💪 Enhanced capabilities

🛠 API compatibility intact

🌍 Fully open-source models & papers

🐋 1/n pic.twitter.com/p1dV9gJ2Sd

— DeepSeek (@deepseek_ai) December 26, 2024

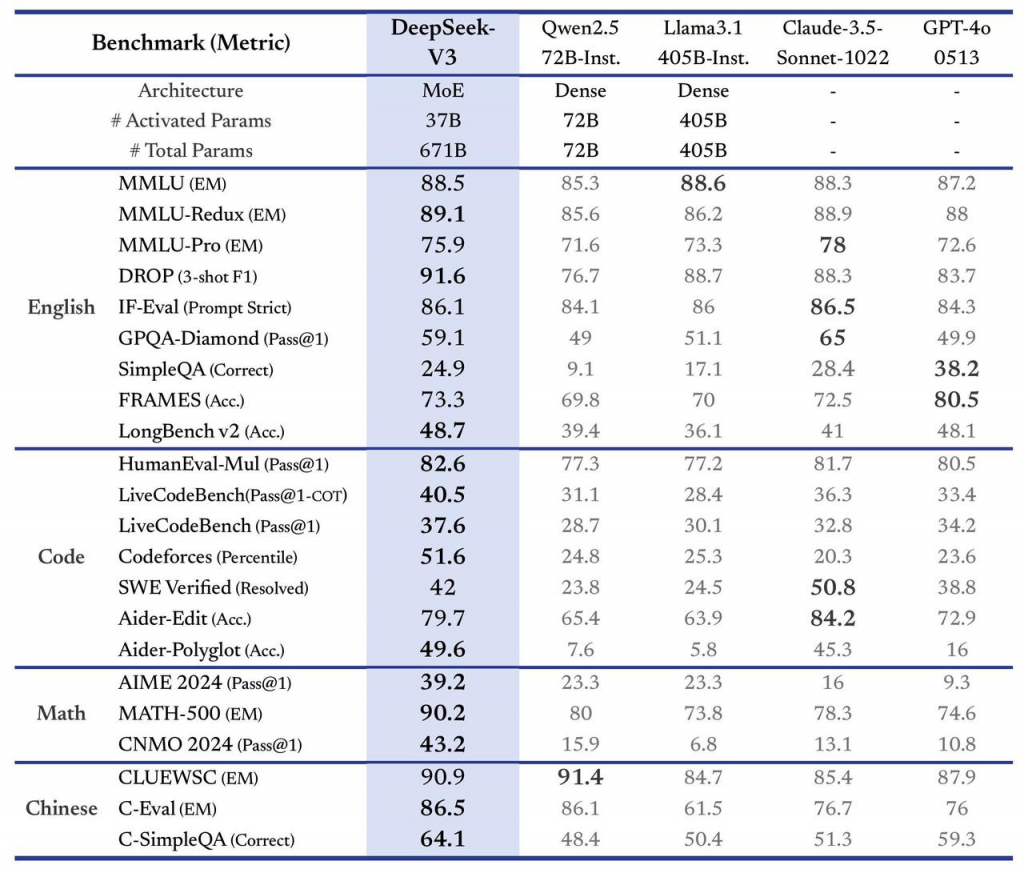

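DeepSeek V3 has 671 billion parameters, more than the 405 billion in Meta's Llama 3.1. A larger parameter count can give a model more capacity for complex tasks and more accurate answers, although V3 is a mixture-of-experts (MoE) model that activates only 37 billion of those parameters for each token.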
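Raw parameter counts are not directly comparable here. According to DeepSeek's announcement, V3 is a mixture-of-experts model that uses 37B of its 671B parameters per token, while Llama 3.1 405B is a dense model that uses all of its parameters for every token. A back-of-the-envelope comparison, using only those published figures (the constant and helper names are illustrative):

```python
# Published parameter counts, in billions (DeepSeek-V3 figures from DeepSeek's announcement).
DEEPSEEK_V3_TOTAL = 671
DEEPSEEK_V3_ACTIVE = 37   # parameters used per token (mixture of experts)
LLAMA_31_405B = 405       # dense model: every parameter is used for every token


def active_fraction(active: float, total: float) -> float:
    """Share of a model's parameters that participate in processing one token."""
    return active / total


v3_share = active_fraction(DEEPSEEK_V3_ACTIVE, DEEPSEEK_V3_TOTAL)
llama_share = active_fraction(LLAMA_31_405B, LLAMA_31_405B)
# Ratio of per-token active parameters (a rough proxy for per-token compute).
per_token_ratio = LLAMA_31_405B / DEEPSEEK_V3_ACTIVE

print(f"DeepSeek-V3 uses {v3_share:.1%} of its parameters per token")
print(f"Llama 3.1 405B uses {llama_share:.0%} of its parameters per token")
print(f"Llama 3.1 405B activates ~{per_token_ratio:.1f}x more parameters per token")
```

In other words, V3 stores more parameters than Llama 3.1 405B but does roughly a tenth of the per-token parameter work; this says nothing by itself about answer quality, which is what the benchmarks are for.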

The Hangzhou-based company trained the model in two months on a budget of $5.58 million, using only 2,048 GPUs, far less than large tech companies typically require. DeepSeek promises the best price-to-performance ratio on the market.
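To put the reported figures in perspective, they can be converted into GPU-hours and an implied cost per GPU-hour. This is a rough sketch under two assumptions that are not in the article: "two months" is taken as 60 days, and every GPU is assumed to run around the clock. Whether the budget covers anything beyond GPU time is not stated in the article.

```python
# Figures from the article; the 60-day length of "two months" and 24/7
# utilization are simplifying assumptions, not reported numbers.
GPUS = 2048
DAYS = 60                 # assumption: "two months" ~ 60 days
BUDGET_USD = 5_580_000    # reported training budget


def gpu_hours(gpus: int, days: float, hours_per_day: float = 24.0) -> float:
    """Upper bound on GPU-hours if every GPU ran around the clock."""
    return gpus * days * hours_per_day


hours = gpu_hours(GPUS, DAYS)
implied_rate = BUDGET_USD / hours  # implied dollars per GPU-hour

print(f"{hours:,.0f} GPU-hours")            # 2,949,120 GPU-hours
print(f"${implied_rate:.2f} per GPU-hour")  # $1.89 per GPU-hour
```

Under these assumptions the budget corresponds to roughly three million GPU-hours at under $2 each; shorter effective training time would raise the implied hourly rate.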

🎉 What’s new in V3?

🧠 671B MoE parameters

🚀 37B activated parameters

📚 Trained on 14.8T high-quality tokens

🔗 Dive deeper here:

Model 👉 https://t.co/9iwEF6aLuk

Paper 👉 https://t.co/ruzwMFYAAH

🐋 2/n

— DeepSeek (@deepseek_ai) December 26, 2024
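The "671B MoE parameters / 37B activated" split refers to mixture-of-experts routing: a gating function scores a set of expert sub-networks for each input, and only the top-scoring few are actually run. The toy sketch below illustrates generic top-k gating only; the expert count, `k`, the scalar "experts", the linear gate, and the function names are all hypothetical and do not reflect DeepSeek-V3's actual configuration or routing scheme.

```python
import math
import random
from typing import Callable, List, Tuple

Expert = Callable[[float], float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(x: float, gate_weights: List[float], experts: List[Expert],
              k: int) -> Tuple[float, List[int]]:
    """Score every expert for input x, run only the top-k, and mix their outputs.

    Returns the mixed output and the indices of the experts actually evaluated.
    """
    logits = [w * x for w in gate_weights]  # toy linear gate (hypothetical)
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])  # renormalize over chosen experts only
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Hypothetical toy setup: 8 "experts" that each just scale their input.
NUM_EXPERTS, K = 8, 2
experts: List[Expert] = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
random.seed(0)
gate_weights = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

y, used = top_k_moe(3.0, gate_weights, experts, K)
print(f"experts evaluated: {sorted(used)} of {NUM_EXPERTS}, output = {y:.3f}")
print(f"share of expert weights used for this input: {K / NUM_EXPERTS:.0%}")
```

Scaled up, the same idea lets a model hold far more parameters than it uses for any single token. Production MoE layers route each token inside every transformer layer with learned gates and typically add mechanisms to balance load across experts; those details are omitted here.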

In the future, the startup plans to add multimodality and "other advanced features."

OpenAI co-founder Andrej Karpathy called DeepSeek's work impressive, especially given its limited resources.

DeepSeek (Chinese AI co) making it look easy today with an open weights release of a frontier-grade LLM trained on a joke of a budget (2048 GPUs for 2 months, $6M).

For reference, this level of capability is supposed to require clusters of closer to 16K GPUs, the ones being… https://t.co/EW7q2pQ94B

— Andrej Karpathy (@karpathy) December 26, 2024

"This does not mean that large GPU clusters are no longer needed to build advanced LLMs. But it is important not to waste the resources you have. This project shows that there is still a lot of room for improvement in both data and algorithms," Karpathy noted.

Earlier, DeepSeek released an "OpenAI o1 competitor": the advanced "reasoning" model DeepSeek-R1-Lite-Preview.

As a reminder, in July the Chinese company Kuaishou opened access to its AI video generation model, Kling.

Cryptol: news about cryptocurrencies, information technology, and decentralized solutions. Stay up to date with current events in the world of digital technology.